AI Glossary – Part 3: Uses, Challenges and Ethics of Artificial Intelligence

AI is about technology and use cases that are transforming certain professions, but also, and above all, about ethical issues!

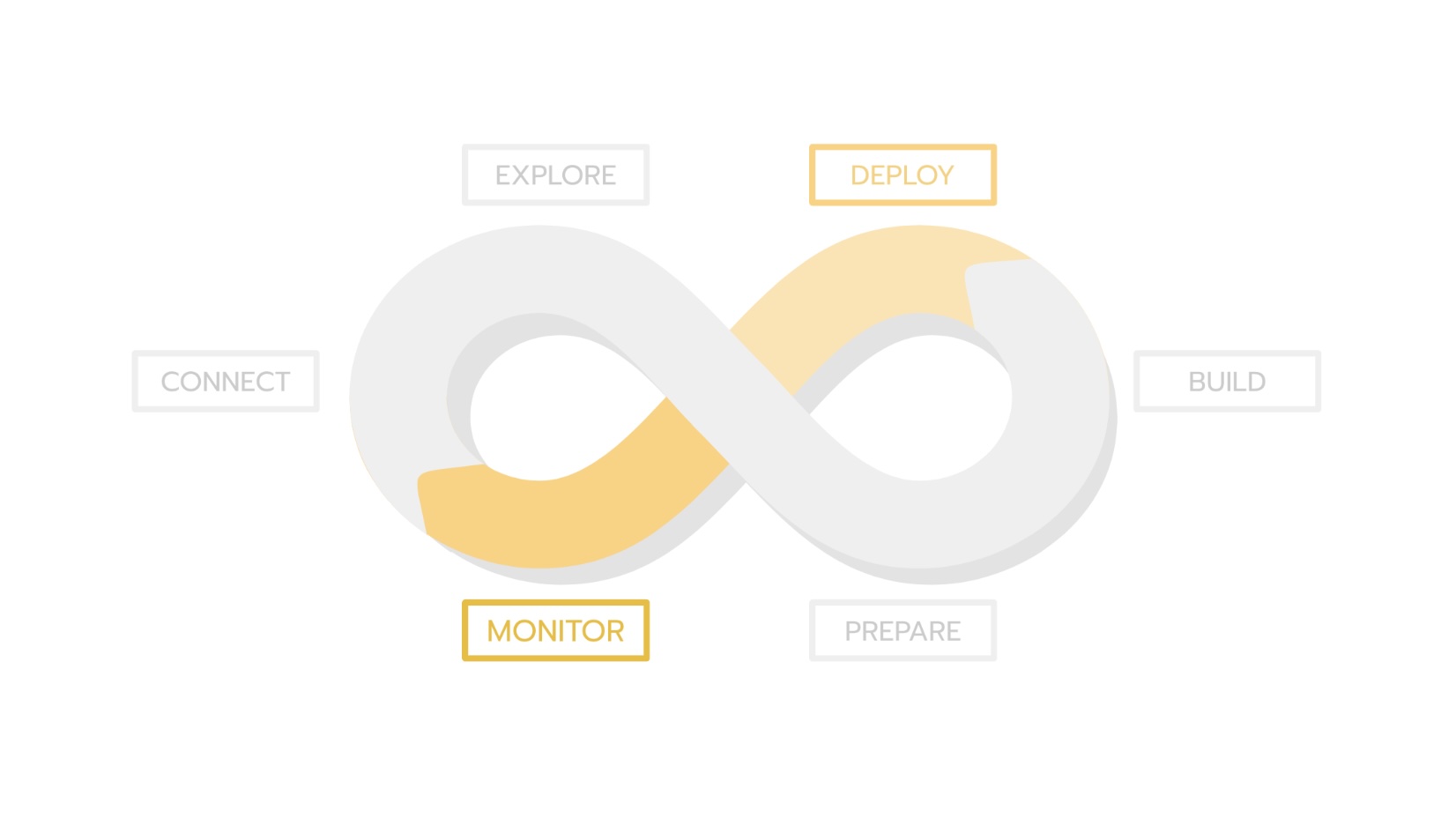

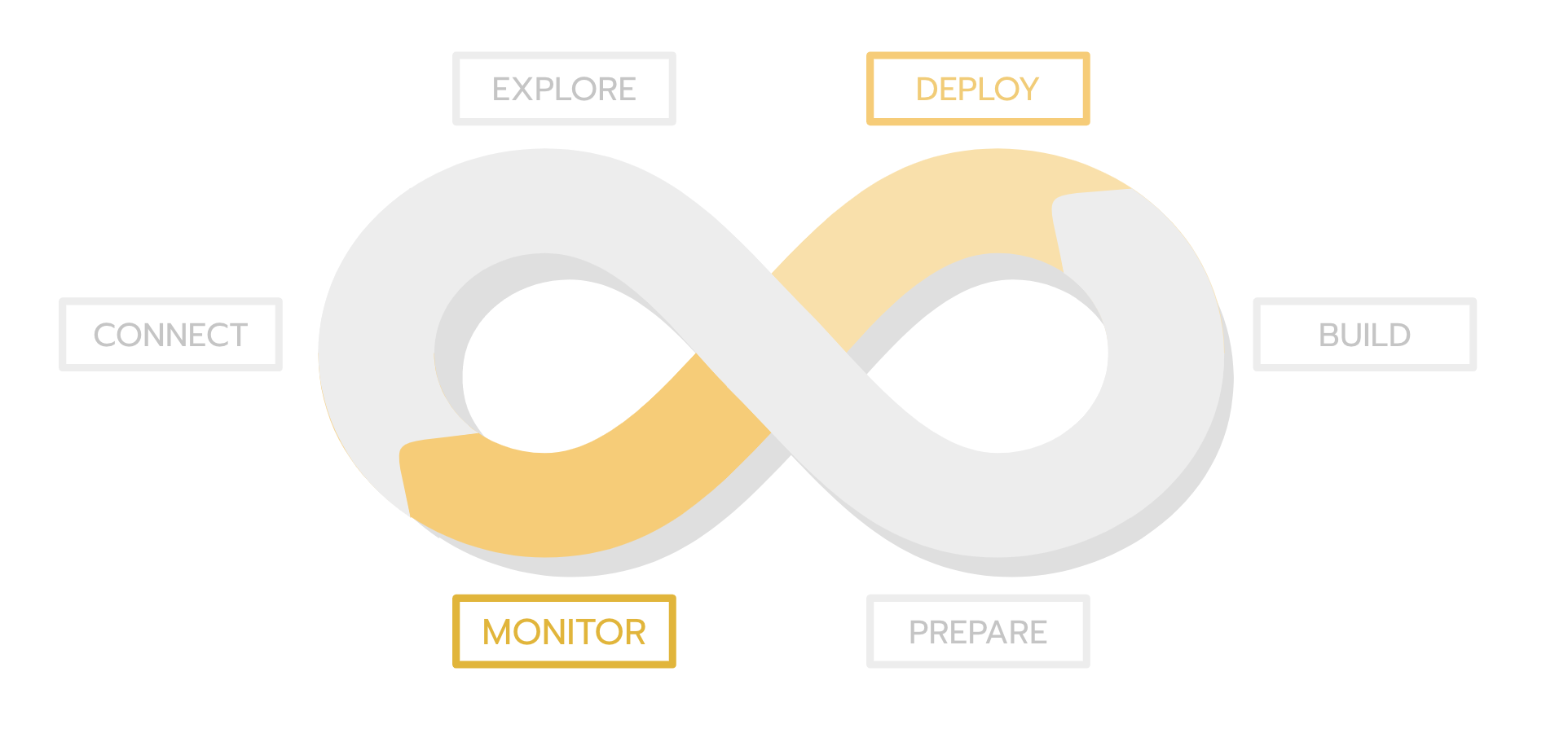

We will try to provide some answers to these questions in two parts. This second article focuses on the first deployment and the iterations that quickly improve it, while the first one focuses on design, data collection, exploration and application prototyping.

As we saw in the previous article (Why MLOps is every data scientist’s dream? Part 1: Starting an AI project), MLOps accelerates the design of an AI application from the very beginning (infrastructure set-up, data collection and prototyping). However, it also brings tremendous benefits to the users themselves by allowing the data team to deliver and improve an application in a real-life context of use (that is, in “production”).

The main MLOps feature, the one that unlocks all the others, is giving data scientists the ability to deploy their machine learning pipelines. 87% of AI projects never even make it to the production phase, and the first goal of MLOps is to make this number smaller and smaller.

There are two main reasons behind this number.

However, deploying an AI application is nowhere near the end of a data scientist’s role in the life cycle of an AI project. What happens to the model once it is released? How does its performance evolve over time? Does the model need retraining, and on which criteria? Are there specific cases in which performance is low? Does it sometimes fail, and why? Do end users actually use it? Will it require bigger machines soon? If so, what is the bottleneck: CPU, RAM or disk? These are some of the questions data scientists have to ask themselves to improve an AI application in a meaningful way.

To answer all these questions, they need to monitor a large variety of metrics: model performance metrics, statistics on input data and predictions, usage metrics, execution logs, machine usage and so on. Setting up all this monitoring can be very challenging, and data scientists usually don’t have the expertise or the time to build it. MLOps tools not only allow you to deploy your models easily, they also provide all the monitoring you need to make the right decisions. They give you access both to ready-made metrics, such as machine usage, and to custom metrics that you can design to get the most useful insights for your application.
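To make the idea of custom metrics more concrete, here is a minimal sketch in Python using only the standard library. It does not reflect the API of any particular MLOps tool: the `MetricWindow` class, the `mean_shift` and `needs_retraining` functions, and the thresholds `min_accuracy` and `max_shift` are all hypothetical names and values chosen for illustration. It records a custom metric, measures how far live input data has moved from the training data, and turns both signals into a simple retraining criterion.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev
from typing import Dict, List, Sequence


@dataclass
class MetricWindow:
    """Hypothetical helper: keeps the logged values of one custom metric."""
    name: str
    values: List[float] = field(default_factory=list)

    def log(self, value: float) -> None:
        self.values.append(value)

    def summary(self) -> Dict[str, float]:
        # Basic statistics a dashboard could display for this metric.
        return {
            "count": len(self.values),
            "mean": mean(self.values),
            "std": pstdev(self.values),
        }


def mean_shift(reference: Sequence[float], live: Sequence[float]) -> float:
    """Distance between the live mean and the reference mean,
    expressed in reference standard deviations (a crude drift signal)."""
    ref_std = pstdev(reference)
    gap = abs(mean(live) - mean(reference))
    if ref_std == 0:
        return 0.0 if gap == 0 else float("inf")
    return gap / ref_std


def needs_retraining(
    accuracy_history: Sequence[float],
    input_shift: float,
    min_accuracy: float = 0.8,  # assumed threshold, tune per application
    max_shift: float = 2.0,     # assumed threshold, tune per application
) -> bool:
    """Flag retraining when recent accuracy drops or inputs drift too far."""
    recent = list(accuracy_history[-3:])
    low_accuracy = bool(recent) and mean(recent) < min_accuracy
    return low_accuracy or input_shift > max_shift


if __name__ == "__main__":
    training_feature = [1.0, 2.0, 3.0, 4.0, 5.0]
    production_feature = [6.0, 7.0, 8.0]

    accuracy = MetricWindow("weekly_accuracy")
    for value in (0.91, 0.90, 0.89):
        accuracy.log(value)

    shift = mean_shift(training_feature, production_feature)
    print(accuracy.summary())
    print("retrain:", needs_retraining(accuracy.values, shift))
```

In this sketch the accuracy is still acceptable, but the production feature has moved well away from the training data, so the criterion fires on drift alone. A real setup would track many features and use a proper statistical test rather than a single mean comparison.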

With the ability to deploy and monitor your AI applications in your hands, you can now work in fast iterations through the whole machine learning cycle. That is why the primary job of MLOps is to enable data scientists to put their own models into production in a reliable, scalable and easily maintainable way, and to make it so easy that it becomes just another data science task.

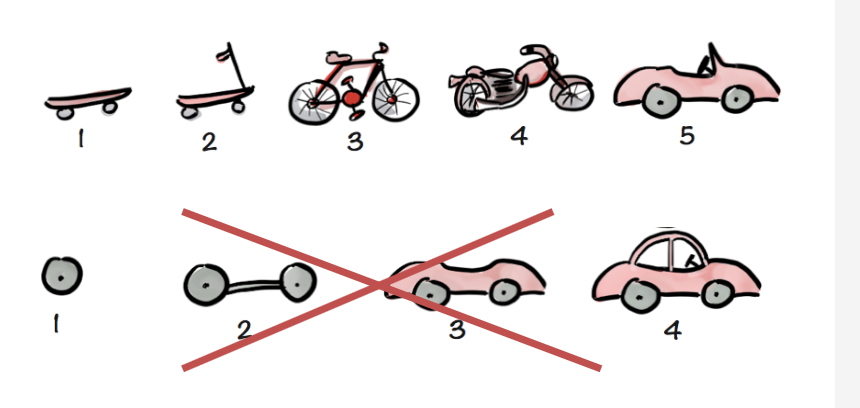

MLOps aligns all stakeholders on a common goal: the delivery of a user-centric AI application that end users can quickly put to use. Indeed, an AI solution that is not used cannot be considered a success, no matter how technically good it is. As we have seen previously, with the automation of repetitive tasks and the ability to reuse previous work, the data scientist can deliver product increments faster and continuously adapt to shifting user needs. MLOps can be seen as an agile framework applied to machine learning solutions. For instance, if you need a means of transportation soon, a bike may be sufficient for the first few weeks. You may not have to wait for a complete, state-of-the-art electric car that would take several years to deliver and whose features would not suit you.

MLOps shortens iterations on AI projects and puts end users at their heart. Data scientists no longer focus solely on the performance of their models but also on the design of a comprehensive, user-centric product. The benefits are numerous:

We see here that MLOps brings teams together and breaks down silos. It enables a much more collaborative, iterative way of working, focused on the final value delivered to end-users.

At every stage of the project, MLOps empowers data scientists and gives them more autonomy and comfort in their day-to-day activities. MLOps unlocks a new way of working and gives data scientists all the tools they need to reduce friction and personal frustration along the difficult road of delivering AI applications.

In addition, it allows them to improve collaboration with every stakeholder, above all end users, and to simplify the iteration process in order to build an AI solution centered on real business needs.

Finally, MLOps reduces the risks associated with the project and closes the gap between experimentation and industrialization by allowing data scientists to build a solution that is “production-ready” from the start.