AI Glossary – Part 3: Uses, challenges and ethics of artificial intelligence

AI is about technology and about use cases that are transforming entire professions, but also, and above all, about ethical issues!

What place does explainability (XAI) hold today in machine learning and data science? Over the past ten years, the main challenge in data science has been to find the right “algorithmic recipe” for building ML models that are ever more powerful and more complex, and therefore less and less understandable.

AI systems built on these algorithms often produce predictions without anyone, whether designer or user, being able to understand what drives them. These systems are nicknamed “black boxes” because of how opaquely they process data.

The black-box phenomenon, and the natural distrust it generates, is a major obstacle to AI adoption. R&D effort must go into trustworthy AI in order to find a form of explainability for every algorithm, even the most complex ones.

Among the actions needed to build trustworthy AI (explainability, confidentiality, frugality, fairness), XAI carries particular weight because it concerns the model as a whole, algorithms and data included. Trust then becomes the responsibility of the AI's creator: the person who designed the model and chose the algorithm.

Many software vendors whose products are used maliciously hide behind the excuse that their algorithm is neutral. They seek to shift the blame onto the end user and the way the tool is used, absolving themselves of any ethical responsibility.

Social networks are the most telling example. They prefer not to have to explain their algorithms, even at the risk of losing control of them, rather than take ethical responsibility for what they have created. The very purpose of explainability is to prevent such abuses and make trustworthy AI possible.

Beyond these ethical and regulatory aspects, explainability is above all of operational value. The self-driving car is probably the most telling example of what explainability (XAI) can and should bring to everyday life. For the designer and the end user alike, XAI gives access to the three levels at which AI can be apprehended:

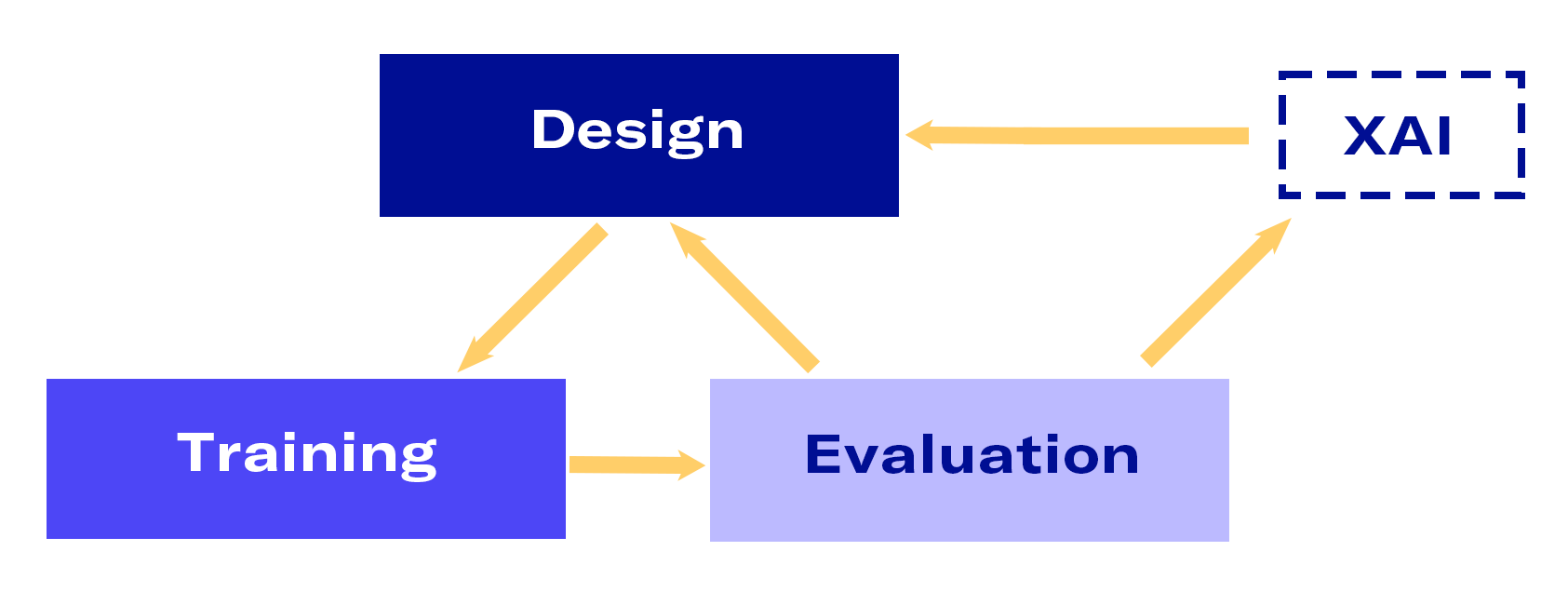

Building a machine learning model usually involves three phases: design, training and evaluation, repeated iteratively until the model is “frozen” in the desired state and ready to go into production.

This is where explainability shows its first major operational benefit. With a better understanding of the algorithmic recipe, the designer can decide not to put a model into production if its explanations are unsatisfactory, despite good statistical results. They can then run another “design, training, evaluation” iteration and avoid deploying an unreliable AI.
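To make this concrete, here is a minimal sketch of such a pre-production check, written in plain Python. It uses permutation importance (one common explanation technique among many, chosen here for illustration and not named in this article): each feature is shuffled in turn and the resulting loss of accuracy is measured. The data, the two toy models, the `suspect_features` list and the thresholds are all hypothetical.

```python
import random

def accuracy(model, rows, labels):
    """Share of rows for which the model predicts the expected label."""
    hits = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return hits / len(rows)

def permutation_importance(model, rows, labels, seed=0):
    """Accuracy lost when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        rng.shuffle(column)
        shuffled = [row[:j] + (value,) + row[j + 1:] for row, value in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return baseline, drops

def ready_for_production(baseline, drops, suspect_features, min_accuracy=0.9, tolerance=0.05):
    """Pass only if the score is good AND no suspect feature drives the predictions."""
    if baseline < min_accuracy:
        return False
    return all(drops[j] <= tolerance for j in suspect_features)

# Hypothetical data: (temperature, sensor_id); the label is 1 when temperature > 50.
# In this sample sensor_id happens to coincide with the label (a data artefact).
rows = [(30 + 2 * i, 1 if 30 + 2 * i > 50 else 0) for i in range(20)]
labels = [1 if temperature > 50 else 0 for temperature, _ in rows]

def sound_model(row):
    return 1 if row[0] > 50 else 0   # relies on temperature

def shortcut_model(row):
    return row[1]                    # relies on the sensor_id artefact

for name, model in [("sound", sound_model), ("shortcut", shortcut_model)]:
    baseline, drops = permutation_importance(model, rows, labels)
    verdict = ready_for_production(baseline, drops, suspect_features=[1])
    print(name, baseline, drops, verdict)
```

Both toy models reach the same accuracy on this sample, so the statistics alone cannot tell them apart. Only the explanation shows that the second one leans on a feature that should not matter, which is the situation in which a designer would go back for another iteration.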

XAI makes it possible to understand what the algorithm does during the training phase and offers the opportunity to:

XAI makes it possible to check that the model being built really matches what was meant to be modelled, which speeds up development by helping data scientists “freeze” the model with confidence before it goes into production.

Once the model is deployed in production, it will face dynamic data, and explainability will be needed again to:

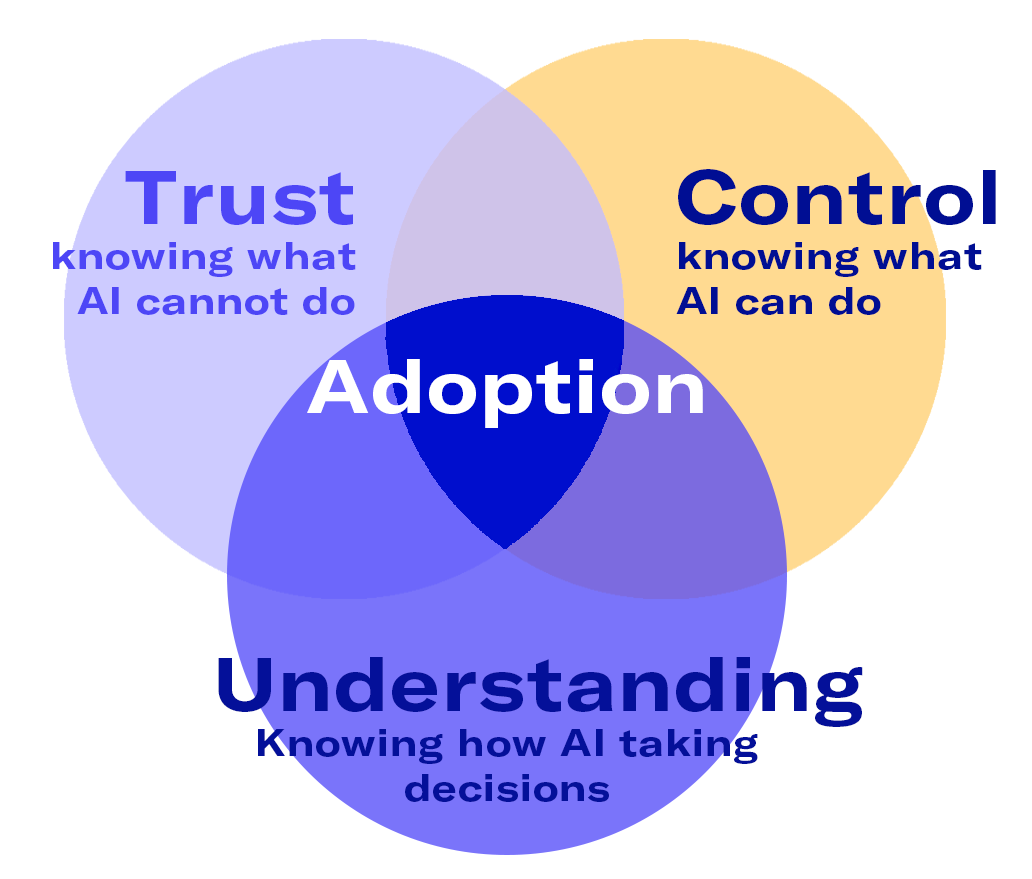

From the end user's point of view, explainability is also of major interest. We believe XAI has a decisive role in the adoption of AI by the general public, by enabling trustworthy AI and smoothing the user experience. Adoption by as many people as possible is now an essential step in the development of AI.

If software vendors want to keep promoting artificial intelligence, they need to open the black box, and expose and explain its inner workings. End users must be given enough to understand so that they stay in the decision loop: should they follow the AI's predictions or recommendations, or not?

Like designers, AI users must have access to the three levels at which AI can be apprehended:

To understand a service that uses artificial intelligence, every user must be able to question the decisions made by the algorithm:

Keeping humans in the AI loop is possible, and it relies on XAI methods.
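As an illustration of what this can look like for an end user, the sketch below attaches a per-feature explanation to each recommendation and leaves the final decision to a person. It assumes a deliberately simple linear scoring model, for which each feature's contribution is just its weight times its value. The loan scenario, the feature names, the weights and the function names are hypothetical.

```python
# Hypothetical linear scoring model for a loan request; weights are illustrative only.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.1}
BIAS = -1.0

def explain(applicant):
    """Return the score and the signed contribution of each feature to it."""
    contributions = {name: weight * applicant[name] for name, weight in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    return score, contributions

def recommend(applicant):
    """Bundle the recommendation with its reasons, strongest factor first."""
    score, contributions = explain(applicant)
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {"recommendation": "approve" if score >= 0 else "decline",
            "score": score, "reasons": ranked}

def final_decision(report, human_choice=None):
    """The human reviewer has the last word; the model only recommends."""
    return human_choice if human_choice is not None else report["recommendation"]

applicant = {"income_k": 40, "debt_ratio": 0.6, "years_employed": 2}
report = recommend(applicant)
print(report["recommendation"], round(report["score"], 2))
for name, contribution in report["reasons"]:
    print(f"  {name}: {contribution:+.2f}")
print(final_decision(report, human_choice="approve"))
```

Because the reasons are shown alongside the recommendation, the reviewer can see which factor weighed most and choose whether to follow or override it. More complex models need dedicated explanation methods to produce comparable per-feature reasons.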

To master an artificial intelligence, one needs to know what it is capable of and to understand the interactions between the data and their causes:

The challenge in mastering AI is to create an empowering complementarity between human and algorithm. Augmenting humans with machines is only conceivable within trustworthy AI, notably thanks to explainability.

XAI is the pillar of trustworthy AI, which also brings together fairness (removing the biases and discrimination present in the data), frugality (minimising the energy needed to train models) and confidentiality (respecting privacy and data confidentiality).

Trust rests on tangible evidence; it is the result of understanding and mastery.

An ML model is built on a limited set of past data. Having explanations of how it works makes it possible to trust its behaviour in production, especially when it faces data it has never seen.

It is the role of explainability to go beyond statistical guarantees and convince as many people as possible that a trustworthy AI, one that keeps humans at the heart of the technology, is possible and already operational.

Building trust to encourage adoption is also the approach taken by the European Union. Keen to create an environment that is both safe and conducive to innovation, the European Commission proposed a set of initiatives in 2021 that will help strengthen trustworthy AI and make explainability the norm in artificial intelligence.

To find out more about Craft AI's commitments and vision